Adaptive dissimilarity measures, dimension reduction and visualization

Learning Vector Quantization (LVQ) for supervised dimension reduction

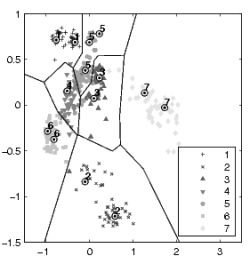

LVQ with an adaptive metric and limited rank (LiRaM LVQ) provides a supervised tool for dimension reduction. The left picture shows global linear transformations of the 7-class UCI segmentation data to two dimensions, learned by LiRaM LVQ with different numbers of prototypes per class.
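The role of the limited-rank metric can be illustrated with a minimal sketch. It assumes the usual matrix LVQ form of the distance, d(x, w) = (x - w)^T Ω^T Ω (x - w), with a rectangular matrix Ω of shape (target dimension × input dimension), so that Ωx directly gives the low-dimensional view. The function names, the toy data and the random, untrained `omega` are illustrative assumptions; the training procedure itself is not shown.

```python
import numpy as np

def project(X, omega):
    """Map the rows of X from N to M dimensions with the rectangular matrix omega (M x N)."""
    return X @ omega.T

def adaptive_sq_dist(X, prototypes, omega):
    """Pairwise d(x, w) = (x - w)^T omega^T omega (x - w), computed in the projected space."""
    diff = project(X, omega)[:, None, :] - project(prototypes, omega)[None, :, :]
    return np.sum(diff ** 2, axis=2)

def classify(X, prototypes, proto_labels, omega):
    """Nearest-prototype classification under the adaptive distance."""
    nearest = np.argmin(adaptive_sq_dist(X, prototypes, omega), axis=1)
    return proto_labels[nearest]

# Toy setup (assumed values, not the UCI segmentation data).
rng = np.random.default_rng(0)
n_features, target_dim = 5, 2
X = rng.normal(size=(10, n_features))
prototypes = rng.normal(size=(3, n_features))
proto_labels = np.array([0, 1, 2])
omega = rng.normal(size=(target_dim, n_features))  # untrained placeholder

embedding = project(X, omega)                       # 2-D coordinates for plotting
predicted = classify(X, prototypes, proto_labels, omega)
```

Because the same matrix defines both the classifier's distance and the projection, the two-dimensional plot shows the data as the classifier sees it.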

Supervised Nonlinear dimension reduction

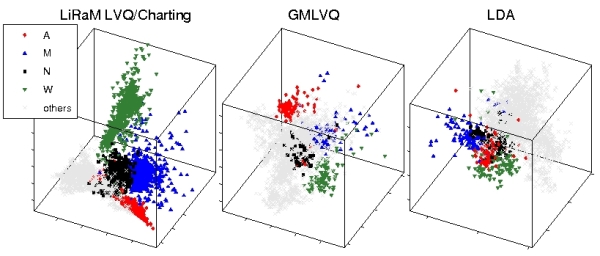

The localized version of LiRaM LVQ learns a local linear projection attached to each prototype. These local linear projections can be combined in a nonlinear way by the charting method of Brand (Charting a manifold [Brand 2003]). The picture shows results of the nonlinear dimension reduction of the UCI Letter data set into three-dimensional space and compares them with results based on a global linear projection (GMLVQ) and Linear Discriminant Analysis (LDA). The overview emphasizes some similar letters.
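How local linear projections can yield a nonlinear map is sketched below. This is only the blending step, under stated assumptions: each prototype k has a local projection `omegas[k]` and a hypothetical affine map (`A[k]`, `b[k]`) into the common low-dimensional space, and a point's responsibilities are taken as a Gaussian soft assignment over its local distances. The optimization that charting uses to find the affine maps is not implemented; random placeholders stand in for them.

```python
import numpy as np

def local_coords(x, prototypes, omegas):
    """z_k = omega_k (x - w_k): coordinates of x in each prototype's local projection."""
    return np.einsum('kmn,kn->km', omegas, x[None, :] - prototypes)

def responsibilities(z, sigma=1.0):
    """Soft assignment of x to each local projection (assumed Gaussian form)."""
    logits = -np.sum(z ** 2, axis=1) / (2.0 * sigma ** 2)
    logits -= logits.max()              # numerical stability
    r = np.exp(logits)
    return r / r.sum()

def blend(x, prototypes, omegas, A, b, sigma=1.0):
    """Responsibility-weighted sum of the affinely mapped local coordinates."""
    z = local_coords(x, prototypes, omegas)              # (K, M)
    mapped = np.einsum('kdm,km->kd', A, z) + b           # (K, D)
    return responsibilities(z, sigma) @ mapped           # (D,)

# Toy setup (assumed sizes): K prototypes, N inputs, M local dims, D output dims.
rng = np.random.default_rng(0)
K, N, M, D = 4, 6, 2, 3
prototypes = rng.normal(size=(K, N))
omegas = rng.normal(size=(K, M, N))
A = rng.normal(size=(K, D, M))   # placeholder affine maps
b = rng.normal(size=(K, D))
x = rng.normal(size=N)

y = blend(x, prototypes, omegas, A, b)
```

Each local map is linear, but the weights depend on the point itself, so the combined mapping is nonlinear.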

Unsupervised Nonlinear dimension reduction

We recently presented an extension of the Exploratory Observation Machine (XOM) for structure-preserving dimensionality reduction. By minimizing the Kullback-Leibler divergence between neighborhood functions in the data and image spaces, this Neighbor Embedding XOM (NE-XOM) links the fast sequential online learning known from topology-preserving mappings with principled approaches based on direct divergence optimization.
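The kind of cost being minimized can be made concrete with a small sketch. It is a simplified batch illustration, not the NE-XOM online update: it assumes Gaussian, row-normalized pairwise neighborhood functions in both spaces, and the function names and width parameters are hypothetical.

```python
import numpy as np

def neighborhood(points, width):
    """Row-normalized Gaussian neighborhood function over all pairs of points."""
    d2 = np.sum((points[:, None, :] - points[None, :, :]) ** 2, axis=2)
    h = np.exp(-d2 / (2.0 * width ** 2))
    np.fill_diagonal(h, 0.0)            # a point is not its own neighbor
    return h / h.sum(axis=1, keepdims=True)

def kl_divergence(p, q, eps=1e-12):
    """Sum of p * log(p / q) over all pairs, with eps guarding against log(0)."""
    mask = p > 0
    return float(np.sum(p[mask] * np.log((p[mask] + eps) / (q[mask] + eps))))

# Toy data (assumed): 20 points in 5-D and a random 2-D image configuration.
rng = np.random.default_rng(0)
data = rng.normal(size=(20, 5))
image = rng.normal(size=(20, 2))

p = neighborhood(data, width=1.0)       # neighborhoods in data space
q = neighborhood(image, width=1.0)      # neighborhoods in image space
cost = kl_divergence(p, q)              # shrinks as image neighborhoods match the data
```

An embedding method of this family moves the image points so that this cost decreases, whether by batch gradient steps or by sequential updates.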